Commits on Source (616)

- .gitignore: 0 additions, 1 deletion

- .gitlab-ci.yml: 4 additions, 5 deletions

- README.md: 40 additions, 3 deletions

- code/.gitkeep: 0 additions, 0 deletions

- docs/1_complex_numbers.md: 319 additions, 0 deletions

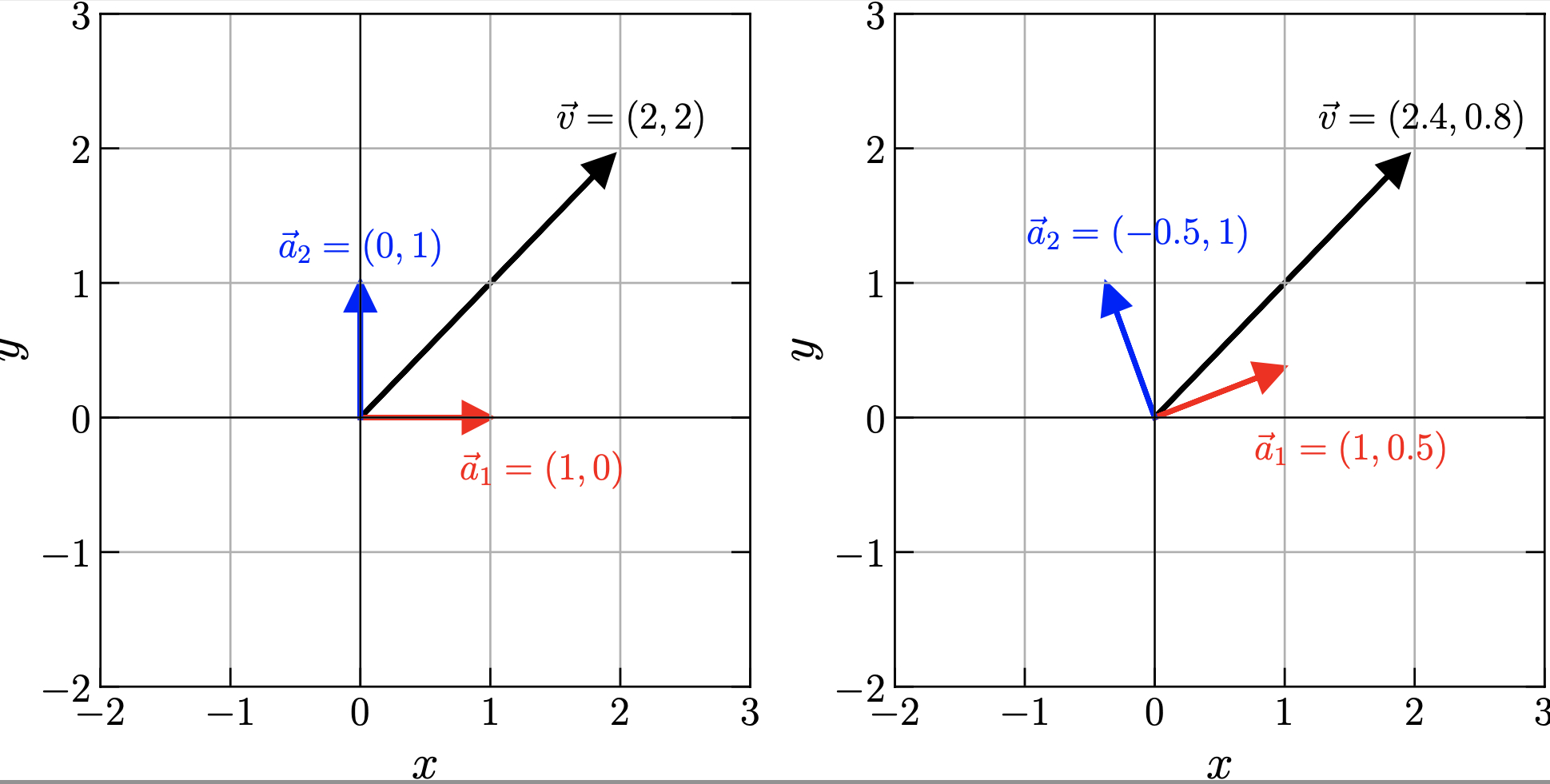

- docs/2_coordinates.md: 472 additions, 0 deletions

- docs/3_vector_spaces.md: 223 additions, 0 deletions

- docs/4_vector_spaces_QM.md: 357 additions, 0 deletions

- docs/5_operators_QM.md: 387 additions, 0 deletions

- docs/6_eigenvectors_QM.md: 269 additions, 0 deletions

- docs/7_differential_equations_1.md: 931 additions, 0 deletions

- docs/8_differential_equations_2.md: 632 additions, 0 deletions

- docs/code/.keep: 0 additions, 0 deletions

- docs/figures/.keep: 0 additions, 0 deletions

- docs/figures/3_vector_spaces_1.jpg: 0 additions, 0 deletions

- docs/figures/Coordinates_11_0.svg: 810 additions, 0 deletions

- docs/figures/Coordinates_13_0.svg: 1066 additions, 0 deletions

- docs/figures/Coordinates_15_0.svg: 2937 additions, 0 deletions

- docs/figures/Coordinates_17_0.svg: 590 additions, 0 deletions

- docs/figures/Coordinates_19_0.svg: 763 additions, 0 deletions

code/.gitkeep

deleted

100644 → 0

docs/1_complex_numbers.md

0 → 100644

docs/2_coordinates.md

0 → 100644

docs/3_vector_spaces.md

0 → 100644

docs/4_vector_spaces_QM.md

0 → 100644

docs/5_operators_QM.md

0 → 100644

docs/6_eigenvectors_QM.md

0 → 100644

docs/7_differential_equations_1.md

0 → 100644

docs/8_differential_equations_2.md

0 → 100644

File moved

File moved

docs/figures/3_vector_spaces_1.jpg

0 → 100644

278 KiB

docs/figures/Coordinates_11_0.svg

0 → 100644

docs/figures/Coordinates_13_0.svg

0 → 100644

docs/figures/Coordinates_15_0.svg

0 → 100644

docs/figures/Coordinates_17_0.svg

0 → 100644

docs/figures/Coordinates_19_0.svg

0 → 100644